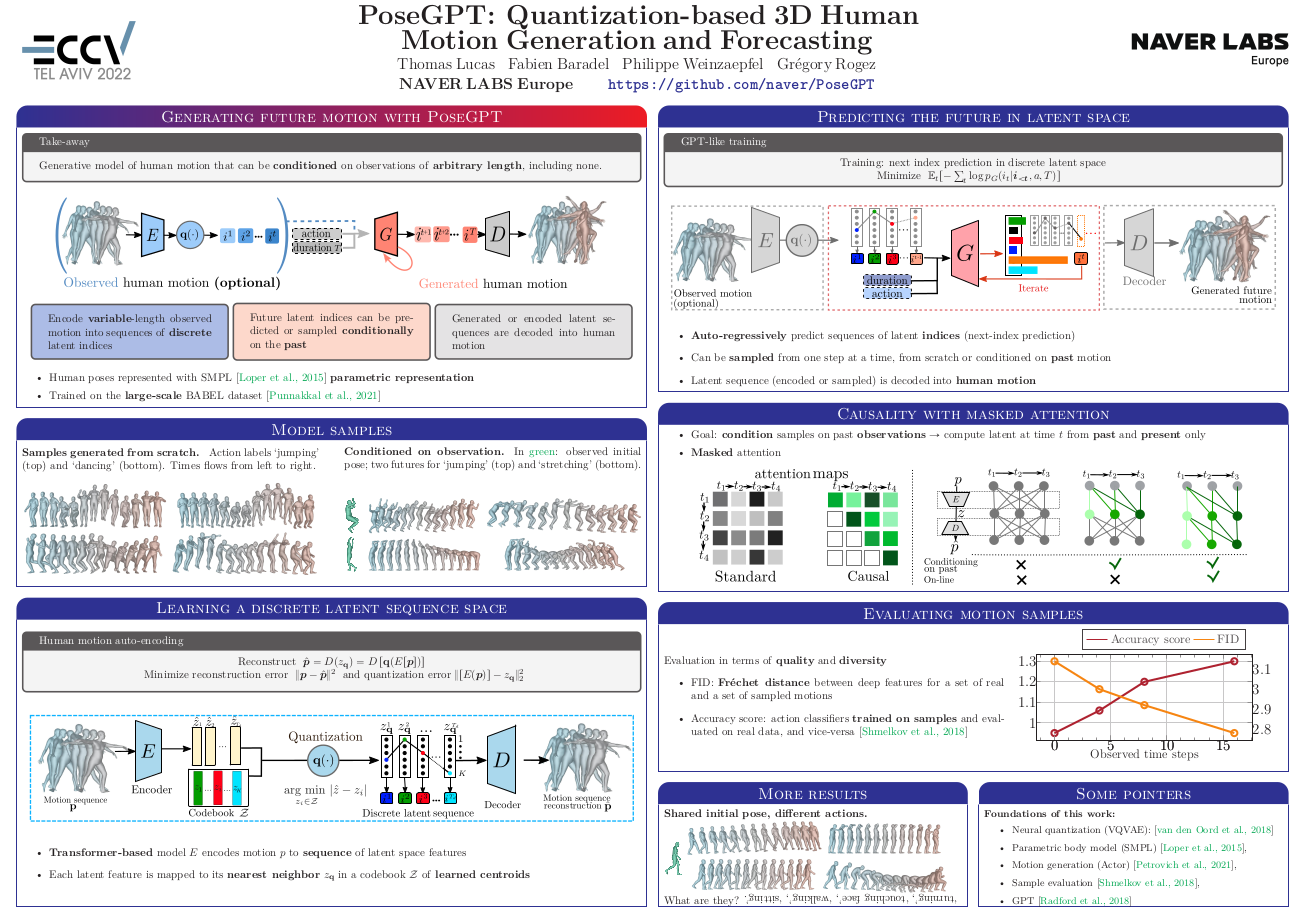

PoseGPT: Quantizing human motion for large scale generative modeling

Thomas Lucas, Fabien Baradel, Philippe Weinzaepfel, Gregory Rogez

Summary

Unlike existing work, we generate motion conditioned on past observations of arbitrary length, including none. To solve this generalized problem, we propose PoseGPT, an auto-regressive transformer-based approach that internally compresses human motion into quantized latent sequences. Inspired by the Generative Pretrained Transformer (GPT), we train a GPT-like model for next-index prediction in this quantized latent space; this allows PoseGPT to output distributions over possible futures, with or without conditioning on past motion. Experiments are mainly conducted on BABEL, a recent large-scale motion capture (MoCap) dataset.
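The summary describes two stages. First, motion is compressed into a sequence of discrete indices. Second, a GPT-like model predicts the next index given the indices so far, and that prefix may be empty. The following is a minimal, stdlib-only Python sketch of those two ideas under stated assumptions. It is not PoseGPT's implementation. The encoder and decoder networks are omitted, so latent vectors are given directly. Quantization is assumed to be a nearest-neighbour codebook lookup, in the style of a VQ-VAE. `toy_logits` is a hypothetical stand-in for the trained transformer. All function names here are illustrative.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = Sequence[float]


def quantize(latents: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each continuous latent vector to the index of its nearest codebook entry.

    Assumption: nearest neighbour under squared Euclidean distance (VQ-VAE style).
    """
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [min(range(len(codebook)), key=lambda k: sq_dist(z, codebook[k]))
            for z in latents]


def dequantize(indices: Sequence[int], codebook: Sequence[Vector]) -> List[List[float]]:
    """Replace each index by its codebook vector (a decoder would then map these back to poses)."""
    return [list(codebook[k]) for k in indices]


def sample_indices(next_index_logits: Callable[[List[int]], List[float]],
                   prefix: Sequence[int], steps: int, rng: random.Random) -> List[int]:
    """Autoregressively extend a possibly empty prefix by sampling from softmax(logits).

    An empty prefix corresponds to unconditional generation (no observed past motion).
    """
    seq = list(prefix)
    for _ in range(steps):
        logits = next_index_logits(seq)
        m = max(logits)  # subtract the max for a numerically stable softmax
        weights = [math.exp(l - m) for l in logits]
        seq.append(rng.choices(range(len(logits)), weights=weights, k=1)[0])
    return seq


def toy_logits(seq: List[int], vocab_size: int = 3) -> List[float]:
    """Hypothetical stand-in for the GPT-like model.

    Uniform scores when there is no past context; otherwise strongly favour
    repeating the most recent index.
    """
    if not seq:
        return [0.0] * vocab_size
    return [10.0 if k == seq[-1] else 0.0 for k in range(vocab_size)]


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    latents = [[0.1, -0.1], [0.9, 0.2], [0.2, 0.8]]
    observed = quantize(latents, codebook)  # -> [0, 1, 2]
    rng = random.Random(0)
    conditional = sample_indices(toy_logits, observed, steps=4, rng=rng)
    unconditional = sample_indices(toy_logits, [], steps=5, rng=rng)
    print(observed, conditional, unconditional)
```

The same sampling loop covers both conditional and unconditional generation. The only difference is whether the prefix holds quantized indices of observed motion or is empty. Because each step samples rather than taking the argmax, repeated calls yield different plausible futures. This is the sense in which a model of this kind outputs a distribution over futures.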